Texture Generation

One very entertaining application of deep learning is style modification and pattern enhancement, which became a popular topic on the internet after Google’s Deep Dream post and the subsequent research and publications on style transfer. Reproducing this research has long been a goal for the development of MindsEye, and now that it has been achieved I’m having quite a bit of fun with the playground I built! I have collected the interesting visual results of my work in this online album.

Pattern enhancement, style transfer, and similar effects are new deep-learning-based tools that can be applied to an image, but in use they behave like any other image processing filter. Combined with other components in a carefully designed pipeline, they can generate or modify images; the results you see in published work are the best output of such pipelines. In many respects these resemble any other image processing pipeline in artistic or computer vision applications, and many of their steps have nothing to do with neural networks.

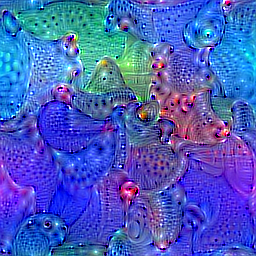

The pipeline we are about to discuss generates tiled textures, suitable for use as texture fills, wallpapers, etc. After being seeded by an initial painting process, it runs a fairly simple loop in which the canvas is progressively enlarged while the texture is optimized against a deep-learning-based model. This both increases training efficiency, since the early iterations run on a small canvas, and improves detail across multiple scales.
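The loop described here can be sketched in plain Java. This is only an illustration under stated assumptions, not MindsEye code: `ProgressiveCanvas`, `enlarge`, `run`, and the `optimize` callback are hypothetical names, and the callback stands in for whatever network-driven optimization is applied at each scale. The resize uses the standard `Graphics2D` bicubic interpolation hint.

```java
import java.awt.Graphics2D;
import java.awt.RenderingHints;
import java.awt.image.BufferedImage;
import java.util.ArrayList;
import java.util.List;
import java.util.function.UnaryOperator;

// Hypothetical sketch of the optimize-then-enlarge loop; not the MindsEye API.
final class ProgressiveCanvas {

    /** Enlarges an image by the given factor using bicubic interpolation. */
    static BufferedImage enlarge(BufferedImage src, double factor) {
        int w = (int) Math.round(src.getWidth() * factor);
        int h = (int) Math.round(src.getHeight() * factor);
        BufferedImage out = new BufferedImage(w, h, BufferedImage.TYPE_INT_RGB);
        Graphics2D g = out.createGraphics();
        g.setRenderingHint(RenderingHints.KEY_INTERPOLATION,
                RenderingHints.VALUE_INTERPOLATION_BICUBIC);
        g.drawImage(src, 0, 0, w, h, null);
        g.dispose();
        return out;
    }

    /**
     * Runs the loop: optimize the canvas, keep the result, enlarge, repeat.
     * The optimize callback is a placeholder for the deep-learning step.
     */
    static List<BufferedImage> run(BufferedImage seed,
                                   UnaryOperator<BufferedImage> optimize,
                                   int rounds,
                                   double factor) {
        List<BufferedImage> saved = new ArrayList<>();
        BufferedImage canvas = seed;
        for (int i = 0; i < rounds; i++) {
            canvas = optimize.apply(canvas);   // style / enhancement step(s)
            saved.add(canvas);                 // "the result is saved"
            canvas = enlarge(canvas, factor);  // next round starts on a larger canvas
        }
        return saved;
    }
}
```

Note that this plain resize does not treat the canvas as toroidal; a tiling-aware version would need to wrap the borders before interpolating so that the seams stay continuous.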

In more detail:

1. The initial canvas is painted using a diamond-square-fill algorithm on a toroidal space. (This is a well-known and flexible texture generation method by itself.)

2. The canvas is then processed using one or more steps, each of which is one of these types:

   (a) The texture is optimized so that one or more style vectors are reproduced, by minimizing several distance metrics. (The weight vector used to mix these metrics is a useful free parameter.)

   (b) The texture is modified so that any patterns that appear on a given layer are enhanced. This maximizes the L2 magnitude of the signals on various layers, subject to a weighting vector.

   (c) The criteria in (a) and (b) can be combined using a signed linear mix.

3. After all steps of the iteration are completed, the result is saved, the canvas is enlarged via bicubic scaling, and the process returns to step 2.
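For readers unfamiliar with the initial painting step, here is a minimal sketch of a diamond-square fill on a torus. It is an assumption-laden illustration rather than the MindsEye painter: the class name, the `levels`, `roughness`, and `seed` parameters, and the choice of a power-of-two grid are all hypothetical. The key point is that every neighbour lookup wraps around the edges, so the result tiles seamlessly.

```java
import java.util.Random;

// Hypothetical sketch of a toroidal diamond-square fill; details are assumptions.
final class ToroidalDiamondSquare {

    /** Returns a (2^levels x 2^levels) height field that wraps on both axes. */
    static double[][] generate(int levels, double roughness, long seed) {
        int n = 1 << levels;
        double[][] h = new double[n][n];
        Random rnd = new Random(seed);
        // On a torus the four "corners" of the grid are the same cell.
        h[0][0] = rnd.nextDouble();
        double amplitude = 1.0;
        for (int step = n; step > 1; step /= 2) {
            int half = step / 2;
            // Diamond step: centre of each square = mean of its corners + noise.
            for (int y = 0; y < n; y += step) {
                for (int x = 0; x < n; x += step) {
                    int x1 = (x + step) % n;
                    int y1 = (y + step) % n;
                    double avg = (h[y][x] + h[y][x1] + h[y1][x] + h[y1][x1]) / 4.0;
                    h[y + half][x + half] = avg + noise(rnd, amplitude);
                }
            }
            // Square step: edge midpoints = mean of four wrapped neighbours + noise.
            for (int y = 0; y < n; y += half) {
                int start = ((y / half) % 2 == 0) ? half : 0;
                for (int x = start; x < n; x += step) {
                    double avg = (h[(y - half + n) % n][x] + h[(y + half) % n][x]
                            + h[y][(x - half + n) % n] + h[y][(x + half) % n]) / 4.0;
                    h[y][x] = avg + noise(rnd, amplitude);
                }
            }
            amplitude *= roughness; // finer scales receive smaller perturbations
        }
        return h;
    }

    private static double noise(Random rnd, double amplitude) {
        return (rnd.nextDouble() * 2.0 - 1.0) * amplitude;
    }
}
```

To seed a colour canvas with something like this, one option is to generate one field per channel with different seeds and rescale each to the 0-255 range.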

Step 0/1 - Initial Painting and Style Transfer

Step 2 - Pattern Enhancement

Step 3, etc - Enlarge and Repeat
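The criteria optimized in the processing steps can be written as a single objective. The notation is an assumption introduced here for illustration, not MindsEye's own: $x$ is the canvas, $s$ a style image, $F_l(x)$ the signals on layer $l$, $d_l$ a style distance measured on layer $l$, and $\alpha_l$, $\beta_l$ the entries of the two weighting vectors.

$$
\mathcal{L}(x) \;=\; \sum_{l} \alpha_l \, d_l(x, s) \;-\; \sum_{l} \beta_l \, \lVert F_l(x) \rVert_2
$$

Minimizing the first sum is the style criterion, and the minus sign on the second sum turns minimization into maximizing the L2 magnitude for pattern enhancement. The signed linear mix then amounts to choosing the relative size and sign of the weights. In the classic style transfer formulation $d_l$ is commonly built from Gram matrices of the layer activations, though this post does not say which distance metrics are used.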

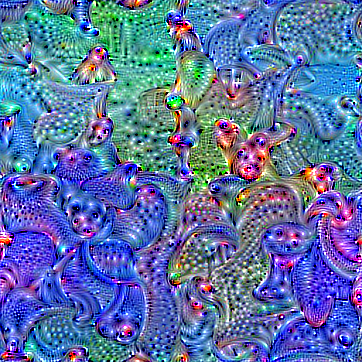

Texture generation is very similar to the classic style transfer algorithm discussed in the paper here, which we’ve also taken some steps to reproduce. The style transfer process closely follows the one detailed above, but it also uses a distance metric to a “content” image to guide the painting process. The result largely depends on the weighting between the style criteria and the content criteria. Assorted examples of the product follow:

Well, I hope you found this interesting! If you’d like to generate some artwork of your own, it is easy to clone and run! The notebooks used in this post are here and here; all that is required is Java, CUDA, and cuDNN! Look for my next post, where I will discuss running these applications on the AWS cloud, leveraging the Deep Learning Base AMI and P3 GPU-accelerated instances…